It’s somewhat unnerving to hear an AI, in an eerily friendly tone, tell me to clean up the clutter on my workstation. I’m a little proud of that mess, but I guess it’s time to stack the haphazardly scattered gadgets and tidy up the tangle of wires.

My sister would agree, too. But the bigger picture is that an AI can now “see” my table, recognize the mess, and dole out homemaking advice that nudges me into action. Google’s Gemini AI chatbot can now do that, and a lot more.

The secret sauce here is a recent feature update built on Project Astra. It has been in development for a long time, and it finally started rolling out earlier this month. The overarching idea is to put an all-seeing, all-hearing, and overtly intelligent AI on your phone.

Google hawks these superpowers under a rather uninspiring name: Gemini Live with camera and screen sharing. Developed at the company’s DeepMind unit, the project began life as a “universal AI assistant.” It’s a shame the final name isn’t as aspirational.

Let’s start with the access situation. The capability is now available to Pixel 9 and Galaxy S25 users. Beyond those phones, if you have any Android phone paired with a Gemini Advanced subscription, you can access the new toolkit, too.

That’s $20 per month, by the way. I tried it on the two aforementioned phones and now have it ready to roll on my OnePlus 13, as well. The nicest part? You don’t have to jump through any technical hoops to access it.

A power/volume button combo or a swipe from the screen corner to summon Gemini is all you need. It doesn’t matter what app you’re running; the new camera and screen-sharing chops are available as an overlay anywhere in the OS.

Making sense of the world around you

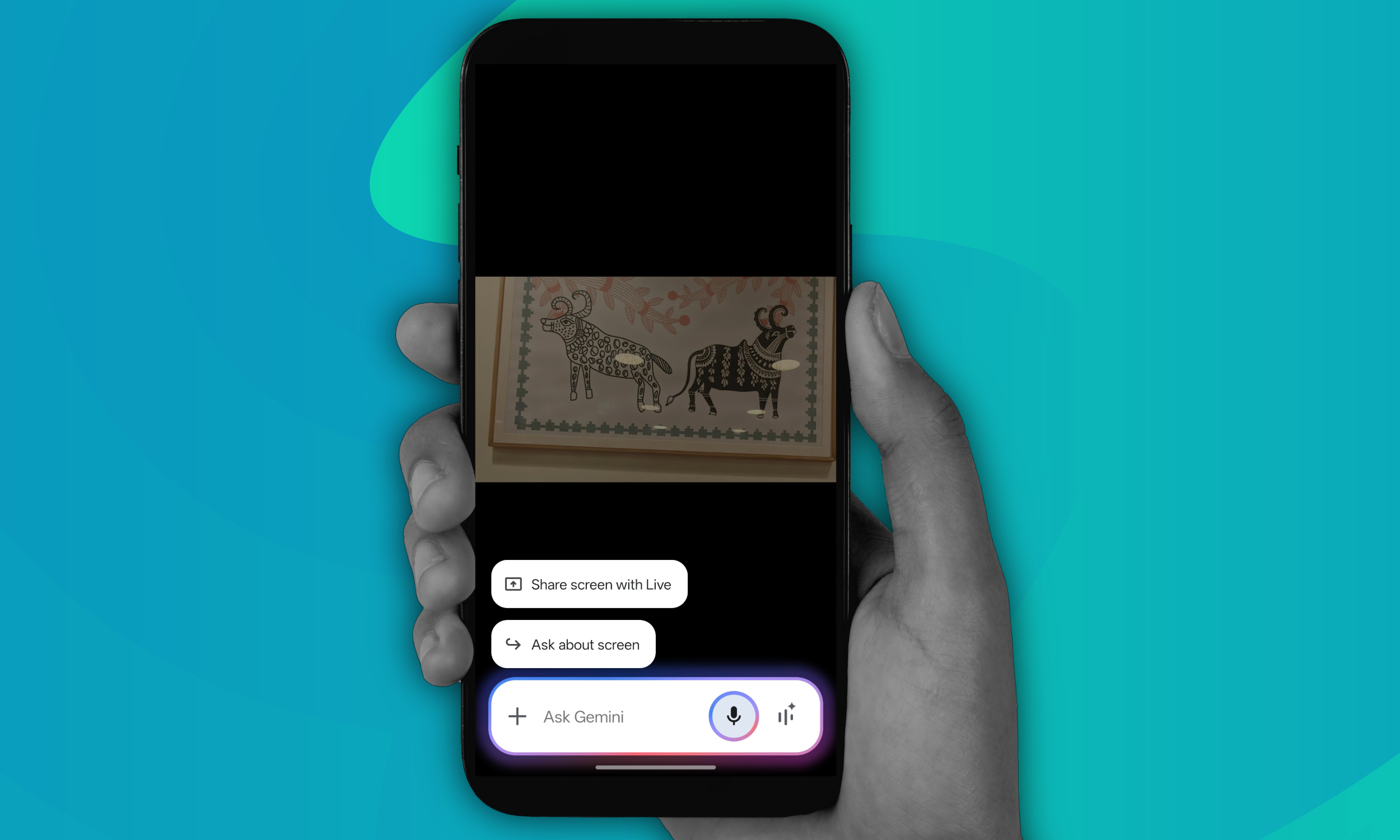

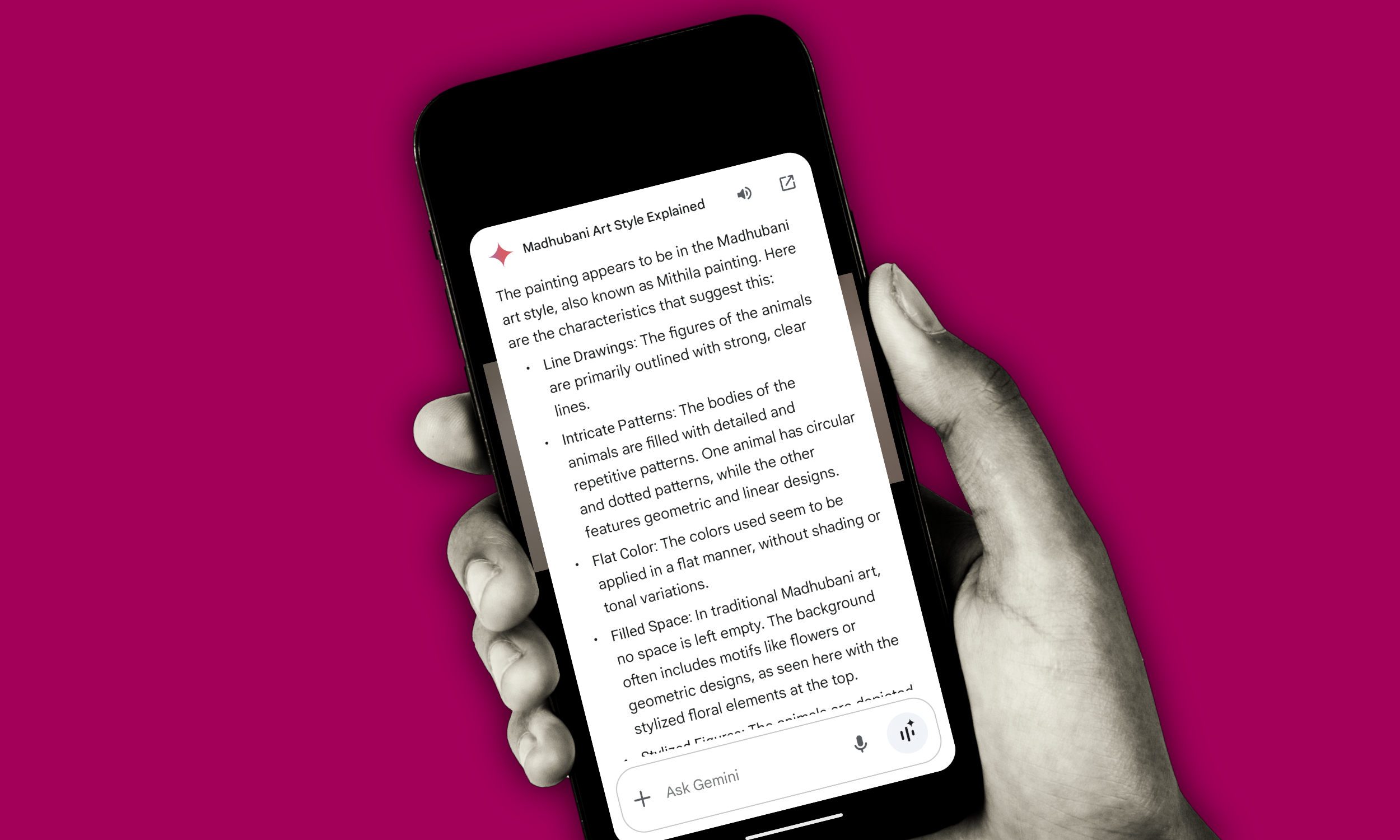

I started by pointing the camera at a painting and asking about it. Gemini Live accurately identified it as a Madhubani-style painting, picking up on the bold use of color and the depiction of animals.

It then gave me a brief history lesson on the art form and the variations that have developed over the years. The information was accurate, down to the most granular detail. Thankfully, you can also choose to have a text-based back-and-forth with Gemini if you’re in a place where voice conversations could be awkward.

What I like most about Gemini Live’s new camera and screen-sharing avatar is that it isn’t overly chatty. You can interrupt it at any moment, which only adds to the “natural” appeal of the conversations.

I tried Gemini in a variety of scenarios, and I was not prepared for how well it handled them.

The answers it provides are usually succinct, as if it wants to give you a chance (or even a nudge) to ask a follow-up question rather than deliver an overwhelmingly long answer. It excels across a whole range of topics and visual scenarios, but there are a few pitfalls.

It can’t use Google Lens yet, which means Gemini can’t compare the images it sees on your phone’s screen against matching results on the web. Moreover, it can’t pull information in real time if you ask it to look up the latest developments around a topic or personality.

I asked it about plant species, restaurant listings, information on notice boards, and my medical prescription for a recent bout of flu. Gemini fared pretty well, better than I’ve seen the chatbot perform so far.

Unlocking a knowledge bank

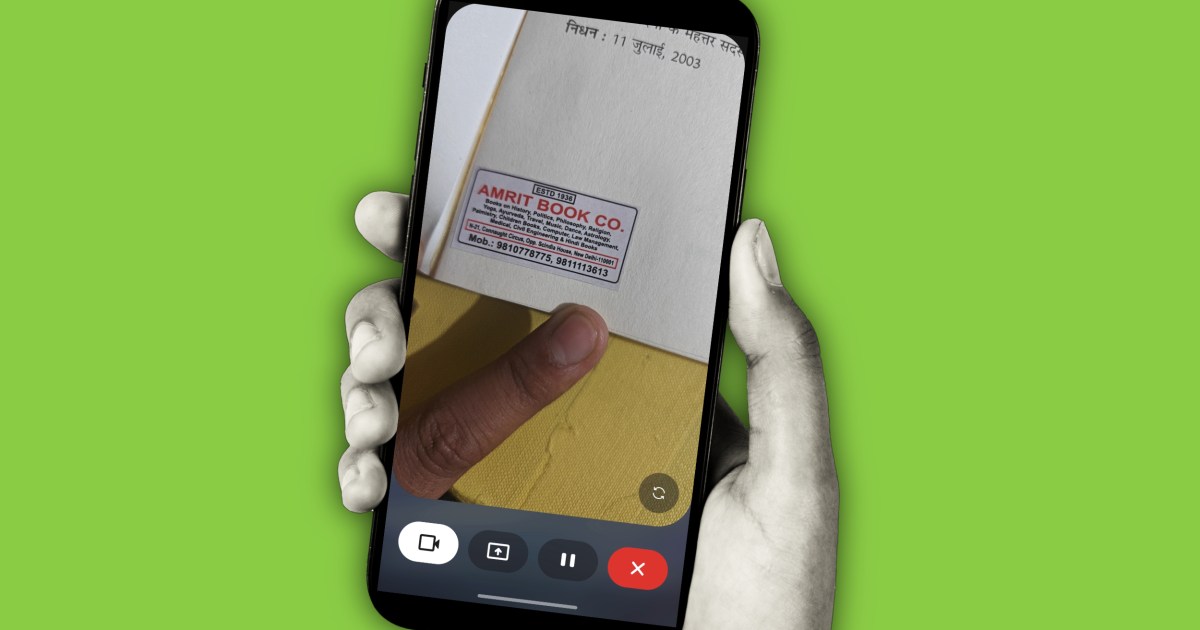

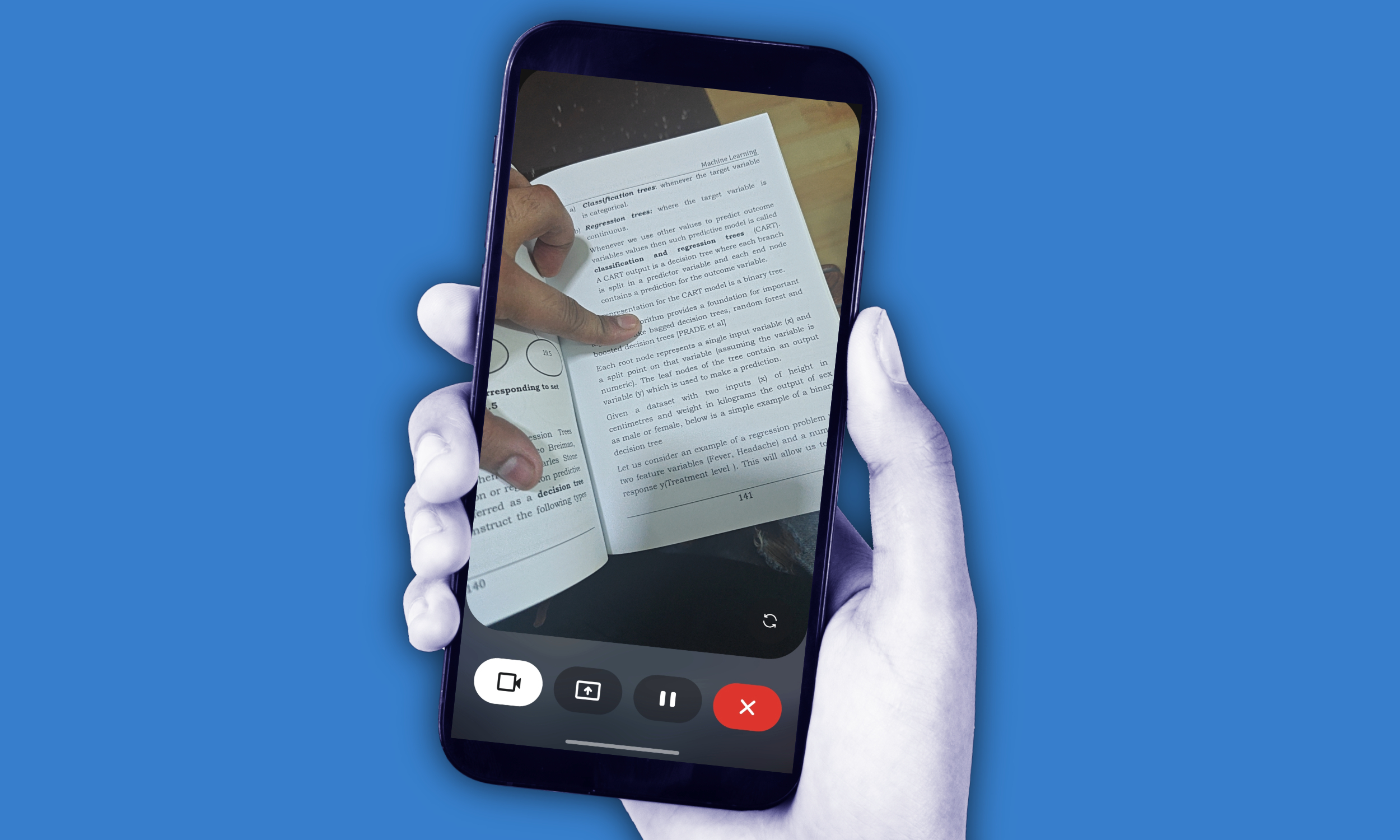

Next, I pushed Gemini to make sense of complex academic material. I put a book on machine learning in the camera frame. Gemini Live not only recognized it but also gave me an overview of the book’s contents and its core subjects.

Out of curiosity, I started flipping through the pages and landed on the chapter list. The AI noticed the change, stopped talking, and asked whether I was interested in any particular chapter now that I was looking at the topic list.

I was genuinely taken aback at this moment.

I asked it to break down a few complex topics, and the AI did a respectable job, even going beyond the scope of the on-page material and pulling information from its expansive knowledge bank.

For example, when I asked it about the introductory page of Bhisham Sahni’s seminal novel, Tamas, the AI correctly picked up the mention of the Sahitya Akademi Award. It then went on to mention details that were not even listed on the page, such as the year the novel won the prestigious literary honor and what the book is all about.

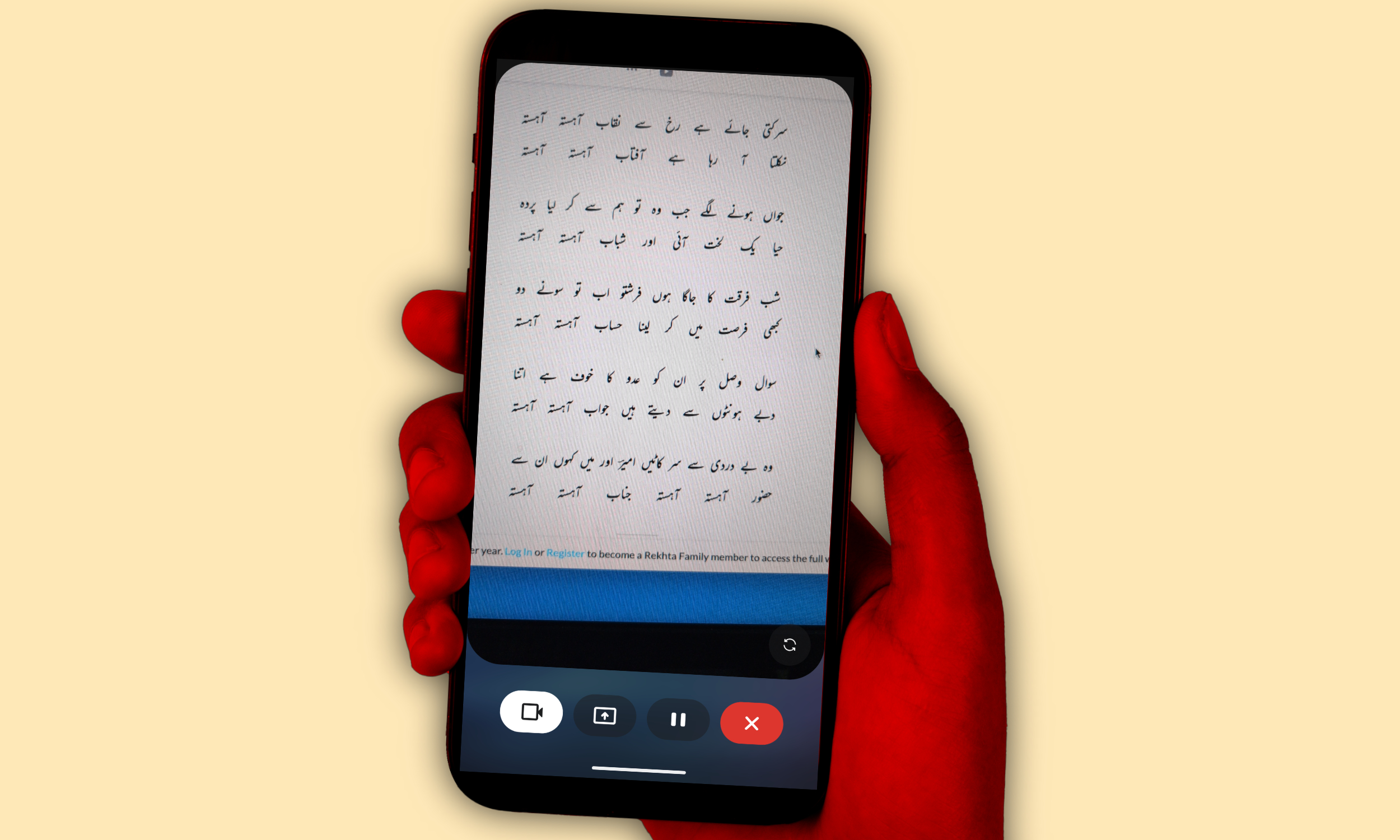

On the flip side, Gemini Live’s Hindi readout was horrible. It was not just the poor accent; Gemini repeatedly uttered pure gibberish and non-words. When reading Urdu, Persian, and Arabic, it did a considerably better job, but it often mixed up words from random lines.

On my first attempt with Urdu poetry, it not only recognized the Urdu text but also gave an accurate summary of the poem. The biggest challenge, once again, was narration. Hearing an anglicized version of Urdu really hurt my ears.

Excels in surprising spots

AI is a fantastic problem-solving tool, and there are numerous benchmarks to prove it. I tested it on physics problems dealing with thermodynamics, as well as electrochemical equations and statistics problems from a handwritten notebook. Gemini Live did an excellent job with all of them.

It excelled at creative chores, too. My sister, a fashion designer, held one of her sketches up to the camera and asked for feedback as well as suggested improvements. Gemini Live started by praising the design, drew parallels with the design philosophies of a few fashion brands, and made a handful of recommendations.

When prodded further, the AI also advised my sister on the best tools for converting hand-drawn sketches into digital concepts. It followed up with helpful information on the software stack and where to find learning material.

When I put a couple of Duracell batteries in the camera view, it not only recognized them accurately but also told me which hyperlocal e-commerce platforms could deliver them to me within minutes.

The services it named, Blinkit and Swiggy Instamart, are only available in India and mostly limited to urban areas. Even in a dimly lit room, it identified a pair of wired earphones on the first attempt.

Situational awareness is its strong suit.

Compared to the usual Gemini chat or the AI Overviews section of Google Search, Gemini Live conversations take a more cautious approach to doling out knowledge, especially when it’s sensitive in nature. I noticed that topics such as food recommendations and medical treatment are handled with particular care, and users are often nudged toward the right expert resource.

A few familiar pitfalls

My overwhelming takeaway is that Gemini’s “Project Astra” makeover is mighty impressive. It’s a glimpse into the future of what smartphones can achieve. With a few improvements, integrations, and cross-app workflows, it can make Google Search feel like an outdated relic. But for now, there are a few glaring flaws.

On a few occasions, I noticed the memory system going haywire. When I asked the AI to identify a fitness band in the camera view, it correctly recognized it as the Samsung Galaxy Fit 3. But when I asked a follow-up question, it mistakenly identified the device as a Huawei fitness band.

It can also blatantly lie, and quite confidently, I must say. For example, when I told it to summarize my review of the wearable, the AI responded that Digital Trends hadn’t reviewed it yet. In reality, the article had been published a week earlier.

Next, I enabled screen sharing and asked it to go through a few articles on my author page. Gemini did a decent job of explaining the stories, but it occasionally stumbled on context. For example, it incorrectly stated that only Intel and AMD can make NPUs that qualify for the Copilot+ badge.

The article, however, clearly states that Qualcomm was the first to meet that criterion, ahead of the competition, and that it was only late last year that AMD and Intel finally leveled up and met that AI chip baseline with a new portfolio of processors.

Midway through a conversation about one article, it ran into a memory issue again. Instead of summarizing the story being discussed, it went back to talking about the first article it had seen via screen sharing. When I interrupted its narration, Gemini corrected the mistake.

Another issue I noticed with non-English narration is that Gemini Live randomly changed its voice and pace midway through. It was quite jarring, and the pronunciation was thoroughly mechanical, a far cry from its human-like English conversational skills.

The machine vision struggles also show up with stylized fonts. On a few occasions, it confidently spat out wrong information, and when asked to correct itself, the AI said it couldn’t find the latest information on that topic. Those scenarios are rare, but they show that Gemini’s errors haven’t gone away.

To sum it all up, I think Gemini Live with camera and screen sharing is one of the biggest leaps AI has made so far, and one of the most practically rewarding implementations of generative AI I’ve used. All it needs is a dash of linguistic diversity and a fix for its “confident liar” syndrome.

Things are definitely on the right track, and overwhelmingly so, but Gemini Live is still a few crucial milestones away from being the perfect AI companion of techno-futuristic dreams.
