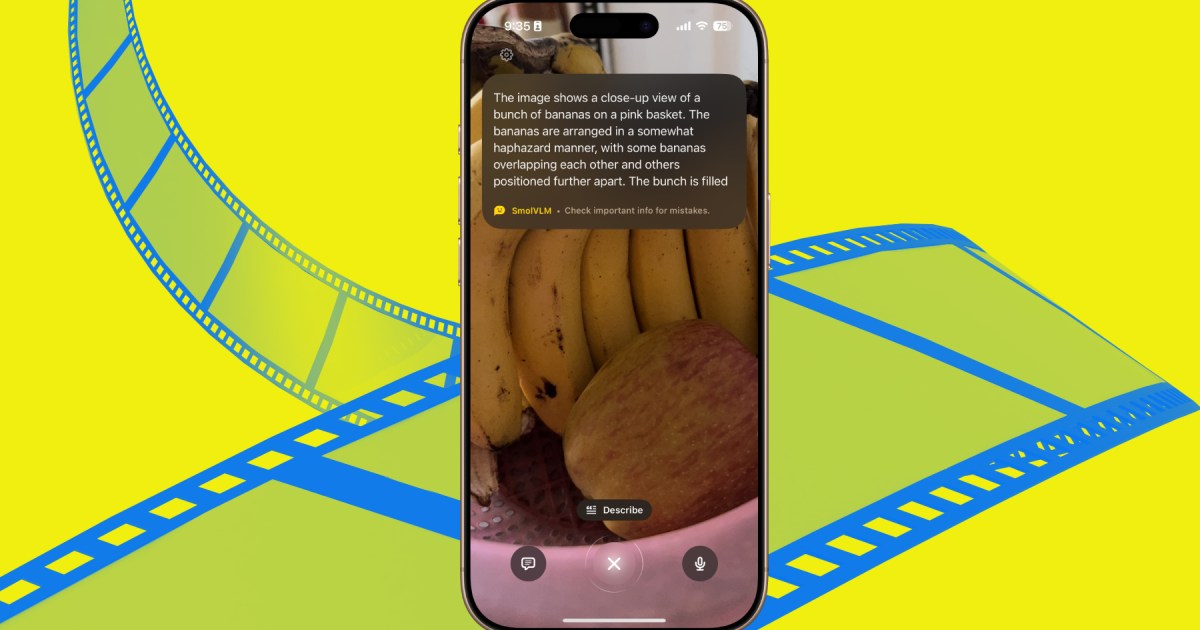

Machine learning platform Hugging Face has released an iOS app that makes sense of the world around you as seen through your iPhone’s camera. Just point it at a scene, or take a picture, and it will use an AI model to describe the scene, identify objects, translate text, or pull out text-based details.

Named HuggingSnap, the app takes a multimodal approach to understanding the scene around you, and it’s now available for free on the App Store. It is powered by SmolVLM2, an open AI model that can handle text, image, and video inputs.

The overarching goal of the app is to let people learn about the objects and scenery around them, including plant and animal recognition. The idea is not too different from Visual Intelligence on iPhones, but HuggingSnap has a crucial leg up over its Apple rival.


It doesn’t require internet to work

All it needs is an iPhone running iOS 18 and you’re good to go. The UI of HuggingSnap is not too different from what you get with Visual Intelligence. But there’s a fundamental difference here.

Apple relies on ChatGPT for Visual Intelligence to work. That’s because Siri is currently not capable of acting like a generative AI tool, such as ChatGPT or Google’s Gemini, both of which have their own knowledge base. Instead, Apple offloads such user requests and queries to ChatGPT.

That requires an internet connection, since ChatGPT can’t work in offline mode. HuggingSnap, on the other hand, works just fine offline. Moreover, an offline approach means no user data ever leaves your phone, which is always a welcome change from a privacy perspective.

What can you do with HuggingSnap?

HuggingSnap is powered by the SmolVLM2 model developed by Hugging Face. So, what can this model running the show behind this app accomplish? Well, a lot. Aside from answering questions based on what it sees through an iPhone’s camera, it can also process images picked from your phone’s gallery.

For example, show it a picture of a historical monument and ask it for travel suggestions. It can interpret the contents of a graph, or make sense of a picture of an electricity bill and answer queries based on the details it has picked up from the document.

It has a lightweight architecture and is particularly well-suited for on-device applications of AI. On benchmarks, it performs better than Google’s competing open PaliGemma (3B) model and rubs shoulders with Alibaba’s rival Qwen AI model with vision capabilities.

The biggest advantage is that it requires fewer system resources to run, which is particularly important in the context of smartphones. Interestingly, the popular VLC media player is also using the same SmolVLM2 model to provide video descriptions, letting users search through a video using natural language prompts.

The model can also intelligently extract the most important highlight moments from a video. “Designed for efficiency, SmolVLM can answer questions about images, describe visual content, create stories grounded on multiple images, or function as a pure language model without visual inputs,” says the app’s GitHub repository.
