As AI tools improve, we keep getting encouraged to offload more and more complex tasks to them. LLMs can write our emails for us, create presentations, design apps, generate videos, search the internet and summarize the results, and so much more. One thing they’re still really struggling with, however, is video games.

So far this year, two of the biggest names in AI (Microsoft and Anthropic) have tried to get their models to generate or play games, and the results are probably a lot more limited than many people expect.

This makes them perfect showcases of where generative AI is really at right now — in short: it can do a lot more than before, but it can’t do everything.

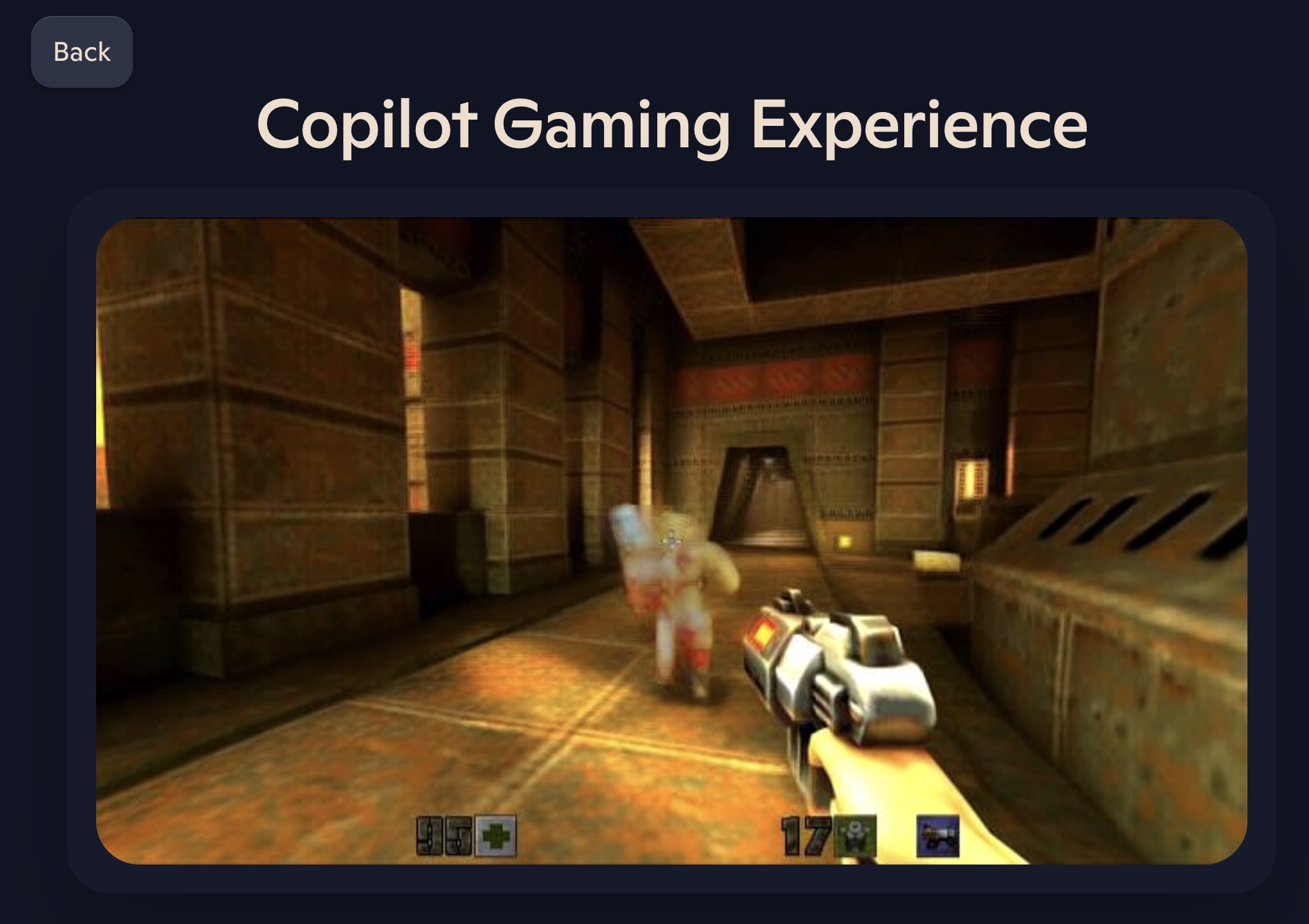

Microsoft generates Quake II

Generating video games has similar problems to generating videos — movement is weird and morph-y, and the AI starts to lose touch with “reality” after a set amount of time. Microsoft’s latest attempt, which anyone can try out, is an AI-generated version of Quake II.

I played it quite a few times and it’s a truly trippy experience, with weird, smudgy enemies appearing out of nowhere and the environment changing around you as you move. Multiple times when I entered a new room, the entrance would be gone when I turned back to face it — and when I looked forward again the walls would have moved.

The experience only lasts a few minutes before it cuts out and prompts you to start a new game — but if you’re unlucky, it can stop properly responding to your inputs even before that.

It’s a great experiment, however, and one I think would be useful for more people to see. It lets you experience for yourself what gen AI is good at, and what its current limitations are. As impressive as it is that we can generate an interactive video game experience at all, it’s hard to imagine that anyone could play this tech demo and think the next Assassin’s Creed will be made by AI.

These kinds of thoughts and assumptions do exist, however, and it’s largely because people can’t escape hearing about AI right now. Even if you couldn’t care less about artificial intelligence, it will still be shoved in your face everywhere you go. The problem is that the information the average person gets is almost entirely made up of big tech marketing and CEO comments that get picked up by news publications.

That means they hear exaggerated and conflicting claims like these:

It has the potential to solve some of the world’s biggest problems, such as climate change, poverty and disease. (Bill Gates)

Probably in 2025, we at Meta, as well as the other companies that are basically working on this, are going to have an AI that can effectively be a sort of midlevel engineer that you have at your company that can write code. (Mark Zuckerberg)

Using AI effectively is now a fundamental expectation of everyone at Shopify. It’s a tool of all trades today, and will only grow in importance. Frankly, I don’t think it’s feasible to opt out of learning the skill of applying AI in your craft. (Tobi Lutke, CEO of Shopify)

We are now confident we know how to build AGI as we have traditionally understood it. We believe that, in 2025, we may see the first AI agents “join the workforce” and materially change the output of companies. (Sam Altman, CEO of OpenAI)

AI is more dangerous than, say, mismanaged aircraft design or production maintenance or bad car production, in the sense that it is, it has the potential — however small one may regard that probability, but it is non-trivial — it has the potential of civilization destruction. (Elon Musk)

This is all pretty extreme, right? It will both save us and destroy us, it’s both a tool of all trades for professionals and a tool that will replace professionals — and apparently, we could get sci-fi-level AGI as soon as this year. When this is all people hear, they start expecting pretty amazing things from these tools and believing all office workers spend their days conversing with their computers like Star Trek characters.

However, that is not what reality looks like. Reality looks like a trippy, smudgy Quake II with incomprehensible shapes for enemies. ChatGPT-level LLMs really were an exciting breakthrough in 2022, and a ton of fun for everyone to play around with, but for the majority of uses big tech is pushing on us right now, AI just isn’t capable enough. Accuracy levels are too low, instruction-following abilities are too low, context windows are too small, and the models are trained on internet nonsense instead of real-world knowledge.

But generating a video game is a pretty complex goal — it takes whole teams of humans years to make these things, after all. How about playing video games instead?

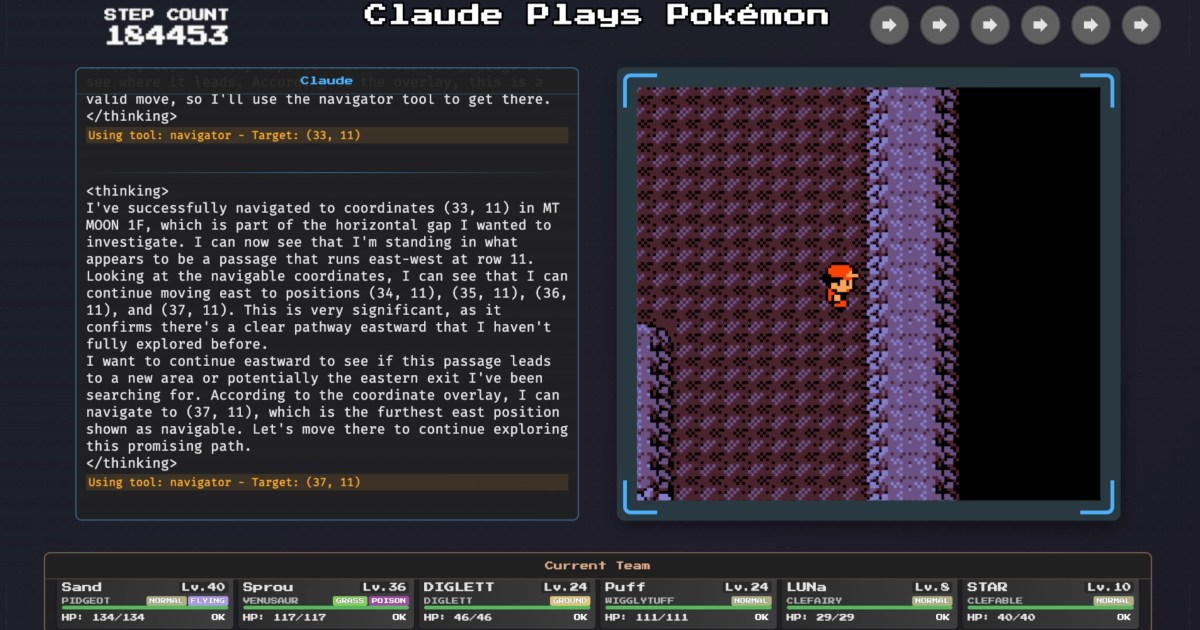

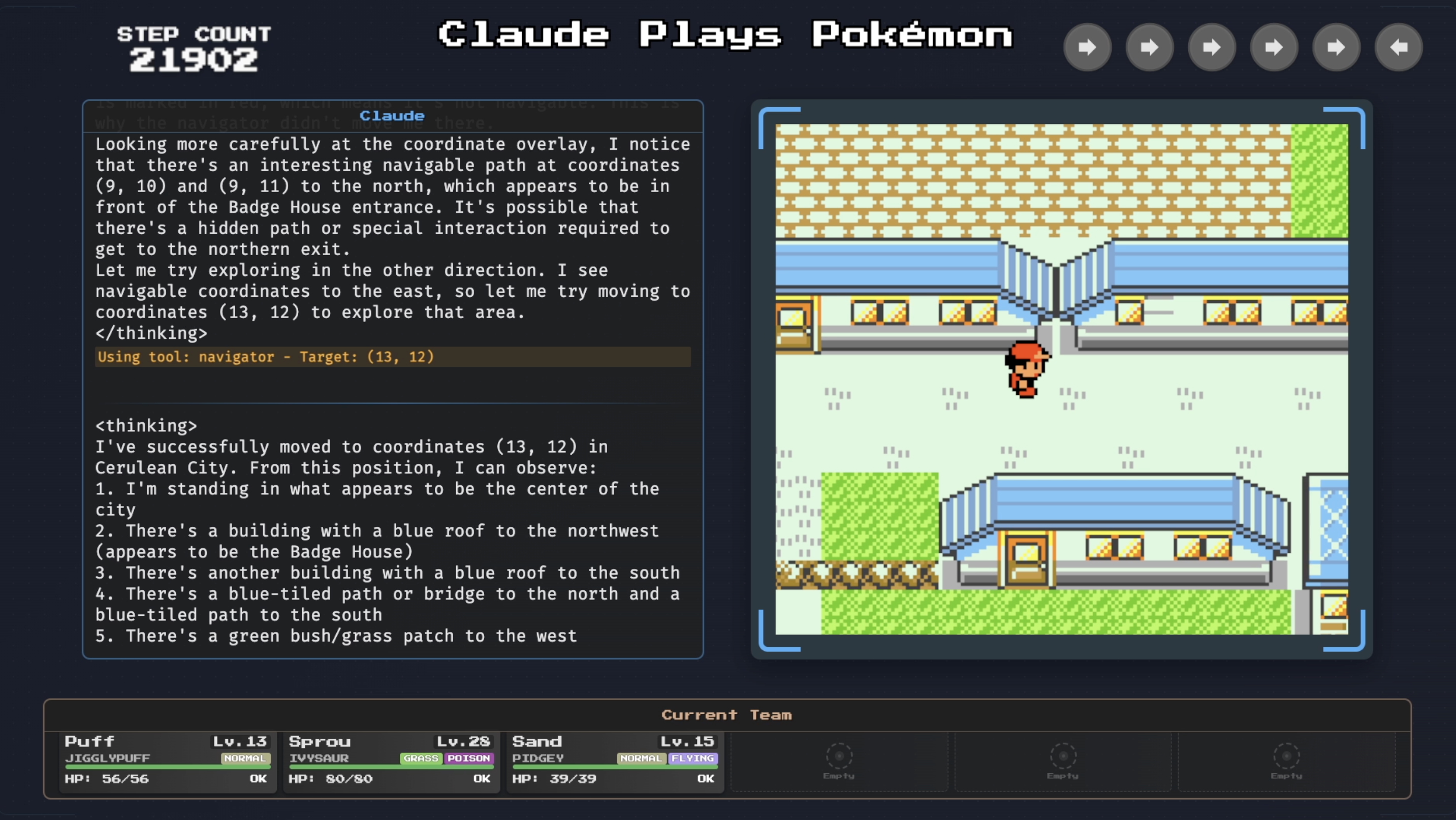

Claude “plays” Pokémon Red

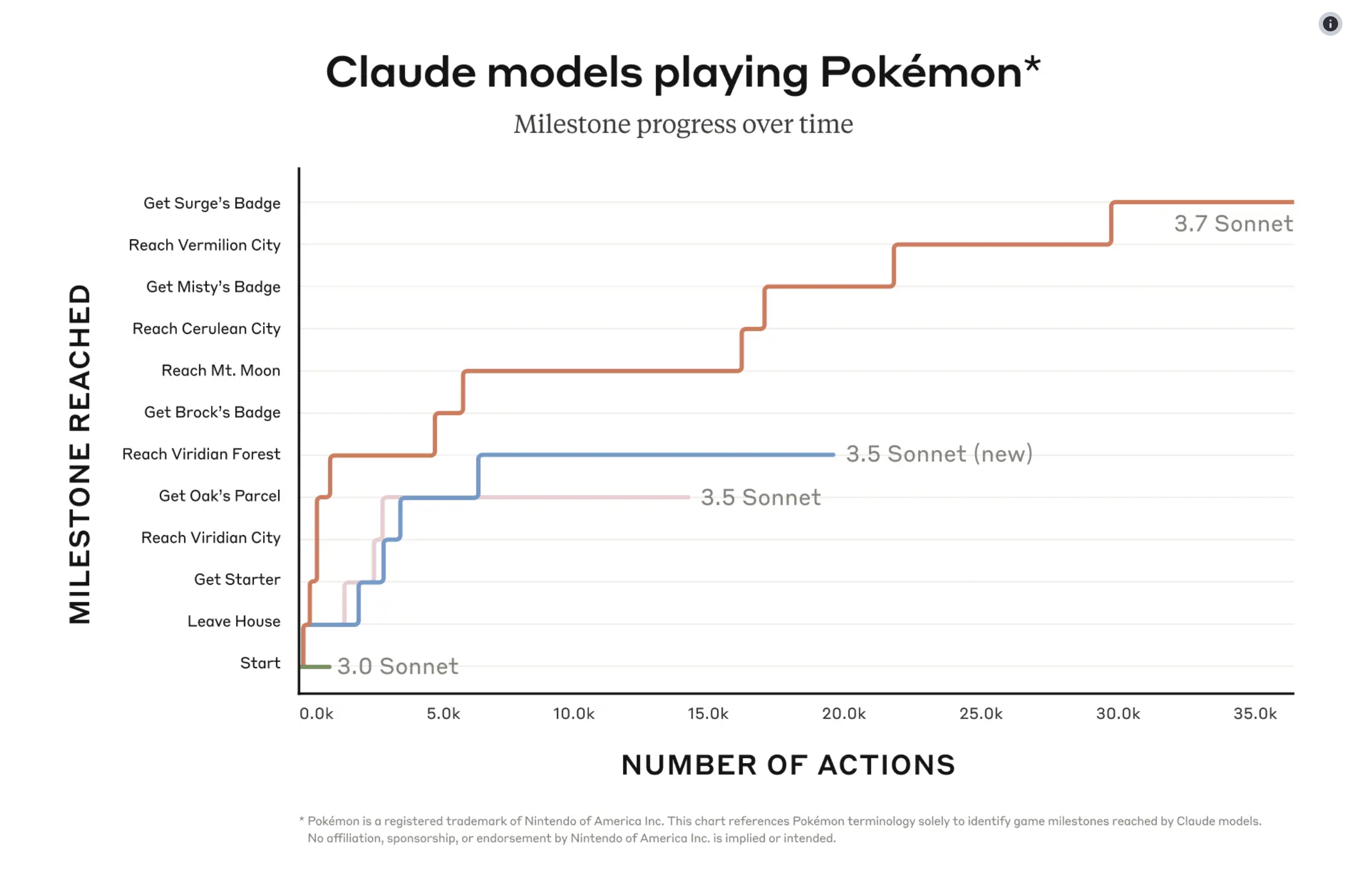

Well, it turns out people are experimenting with that, too. Anthropic’s newest model, Claude 3.7 Sonnet, has been playing Pokémon Red on Twitch for around two months now, and he’s doing the best job an LLM has ever done at playing Pokémon. One slight caveat, however, is that he’s still miles behind the average 10-year-old human.

One of the problems is speed — it takes Claude thousands of actions spanning multiple days to do things like make it through Viridian Forest.

Why does it take so long? It’s not because he can’t figure out how to strategically win Pokémon battles — that’s actually the part he’s best at. Navigating through the environment and avoiding trees and buildings, on the other hand — not so good. Claude has never been trained to play Pokémon, and it’s not easy for him to understand the pixel art and what it represents.

Making it through maze-type areas like Mt. Moon is particularly difficult for him, as he struggles to form a map of the area and avoid retracing his steps. One time, he got himself so stuck in a corner that he concluded the game was broken and generated a formal request to have the game reset.

He’s also not great at remembering what his goals are, what things he’s already tried, or which places he’s already been.

There’s a pretty straightforward reason for that one — LLMs have a finite “context window” that acts as their memory. It can only hold so much information, and once Claude hits the limit, he condenses what he’s got to make room for more. So a piece of information like “Visited Viridian City, entered every building, and spoke to every NPC” might get condensed to just “Visited Viridian City” — prompting Claude to go back and check if there was more to do in the city.
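To make that condensing step concrete, here is a minimal Python sketch. It is not how Claude or the Twitch setup actually manages memory; the `Memory` class, the word-count budget, and the "keep only the text before the first comma" summarizer are all assumptions invented for illustration, and the second note is made up. The point is only that once the budget is exceeded, older notes get shortened and detail is lost.

```python
from dataclasses import dataclass, field


def condense(note: str) -> str:
    """Hypothetical lossy summarizer: keep only the text before the first comma."""
    return note.split(",", 1)[0].strip()


def size(notes: list[str]) -> int:
    """Crude stand-in for a token count: total whitespace-separated words."""
    return sum(len(n.split()) for n in notes)


@dataclass
class Memory:
    budget: int  # maximum words, standing in for the context window limit
    notes: list[str] = field(default_factory=list)

    def add(self, note: str) -> None:
        self.notes.append(note)
        # Condense the oldest notes first until everything fits again.
        for i in range(len(self.notes)):
            if size(self.notes) <= self.budget:
                break
            self.notes[i] = condense(self.notes[i])


memory = Memory(budget=12)
memory.add("Visited Viridian City, entered every building, and spoke to every NPC")
memory.add("Entered Viridian Forest, heading north")  # made-up note for illustration
print(memory.notes)
# ['Visited Viridian City', 'Entered Viridian Forest, heading north']
```

After the second note arrives, the first one no longer says that every building was entered or every NPC spoken to, which is exactly the kind of gap that would send a player back to double-check the city.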

To sum it up: Claude can’t figure out where he’s going, he walks into walls, mistakes random objects for NPCs, forgets where he’s been and what he’s trying to do, and every decision he makes requires paragraphs and paragraphs of reasoning. This isn’t a criticism — these are both exciting experiments that are pushing LLMs as far as they can go.

But with all the hype around AI, it feels important for people to see demos like these and make up their own minds about AI. Certain figures are trying to push the narrative that we’re about to reach the peak, that within years AI will be beyond even the smartest humans, but I don’t think they’re being sincere; they’re just being salesmen. We’re nowhere near the peak. This whole thing is only just beginning.
